Will androids write novels about electric sheep? The dream, or nightmare, of totally machine-generated prose seemed to have come one step closer with the recent announcement of an artificial intelligence that could produce, all by itself, plausible news stories or fiction. It was the brainchild of OpenAI – a nonprofit lab backed by Elon Musk and other tech entrepreneurs – which slyly alarmed the literati by announcing that the AI (called GPT2) was too dangerous for them to release into the wild, because it could be employed to create “deepfakes for text”. “Due to our concerns about malicious applications of the technology,” they said, “we are not releasing the trained model.” Are machine-learning entities going to be the new weapons of information terrorism, or will they just put humble midlist novelists out of business?

Let’s first take a step back. AI has been the next big thing for so long that it’s easy to assume “artificial intelligence” now exists. It doesn’t, if by “intelligence” we mean what we sometimes encounter in our fellow humans. GPT2 is just using methods of statistical analysis, trained on huge amounts of human-written text – 40GB of web pages, in this case, that received recommendations from Reddit readers – to predict what ought to come next. This probabilistic approach is how Google Translate works, and also the method behind Gmail’s automatic replies (“OK.” “See you then.” “That’s fine!”). It can be eerily good, but it is not as intelligent as, say, a bee.
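For the curious, the idea of predicting “what ought to come next” can be shown with a toy. The sketch below is not how GPT2 works internally (GPT2 is a large neural network, and this is a far cruder word-pair counter); it simply tallies which word most often follows each word in a tiny made-up corpus and then parrots the likeliest continuation. The function names and the sample sentences are invented for illustration.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    followers = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        followers[current][nxt] += 1
    return followers


def predict_next(followers, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = followers.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


def continue_text(followers, prompt, length=5):
    """Extend the prompt one word at a time, always taking the likeliest next word."""
    words = prompt.lower().split()
    for _ in range(length):
        nxt = predict_next(followers, words[-1])
        if nxt is None:
            break
        words.append(nxt)
    return " ".join(words)


# Hypothetical miniature "training set"; the real one was 40GB of web pages.
corpus = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."
model = train_bigrams(corpus)
print(continue_text(model, "the dog", length=3))
```

Even this toy produces something sentence-shaped without knowing what a dog or a rug is, which is rather the point.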

Right now, novelists don’t seem to have much to fear. Fed the opening line of George Orwell’s Nineteen Eighty-Four – “It was a bright cold day in April, and the clocks were striking thirteen” – the machine continued the narrative as follows: “I was in my car on my way to a new job in Seattle. I put the gas in, put the key in, and then I let it run. I just imagined what the day would be like. A hundred years from now. In 2045, I was a teacher in some school in a poor part of rural China. I started with Chinese history and history of science.”

This is quite baffling for many reasons. Since the narrator cannot have a job in Seattle and also a job in “some school” in China at the same time, the story must be set in 1945, with the narrator imagining their future educational career “a hundred years from now”, thanks to some unreasonably optimistic expectations about their lifespan. Even in 1945, though, he is driving a car that can be refuelled from the inside (“I was in my car … I put the gas in”) and apparently doesn’t need to be consciously driven (“and then I let it run”). Unless, that is, the story is darker than it seems at first glance and he is running the engine while stationary in his garage.

Did the AI do any better with Jane Austen? The opening phrase of Pride and Prejudice – “It is a truth universally acknowledged” – provoked the machine to gabble on: “that when a nation is in a condition of civilization, that it is in a great measure the business of its leaders to encourage the habits of virtue, and of industry, and of good order among its people.” This does sound rather like some 19th-century political bloviator, even if a slightly broken version. (The second “that” is redundant, and it should read “in great measure” without the indefinite article.)

Is there greater cause to worry further down the literary food chain? For a while now there have been “AI bots” that can, we hear, “write” news stories. These are, though, nothing more than giant automated plagiarism machines that mash together bits of news stories written by human beings. As so often, what is promoted as a magical technological advance depends on appropriating the labour of humans, rendered invisible by AI rhetoric. When a human writer commits plagiarism, that is a serious matter. But when humans get together and write a computer program that commits plagiarism, that is progress.

As a news reporter, GPT2 is, to put it generously, rather Trumpian. Fed the first line of a Brexit story – “Brexit has already cost the UK economy at least £80bn since the EU referendum” – it went on a nutty free-associative spree that warned, among other things: “The UK could lose up to 30% of its top 10 universities in future.” (“Up to 30% of the top 10” is a rather roundabout way of saying maybe three.) Brexit, the machine continued, will push “many of our most talented brains out the country on to campuses in the developing world” (eh?), and replacing “lost international talent from overseas” would, according to “research by Oxford University”, cost “nearly $1 trillion”. To which one can only properly respond: Project Fear! To their credit, the machine’s masters at OpenAI admit that it is sometimes prone to what they call “world-modelling failures”, “eg the model sometimes writes about fires happening underwater”.

The makers’ announcement that this program is too dangerous to be released is excellent PR, then, but hardly persuasive. Such code, OpenAI warns, could be used to “generate misleading news articles”, but there is no shortage of made-up news written by actual humans working for troll factories. The point of the term “deepfakes” is that they are fakes that go deeper than prose, which anyone can fake. Much more dangerous than disinformation clumsily written by a computer are the real “deepfakes” in visual media that respectable researchers are eagerly working on right now. When video of any kind can be generated that is indistinguishable from real documentary evidence – so that a public figure, for example, can be made to say words they never said – then we’ll be in a world of trouble. OpenAI agrees that this is a larger problem, even if its proposed remedy is rather vague. To prevent what it calls “malicious actors” from exploiting such technology, it says, we “should seek to create better technical and non-technical countermeasures”. Arguably this is like engineering a bioweapon and its antidote at the same time, rather than choosing not to invent it in the first place.

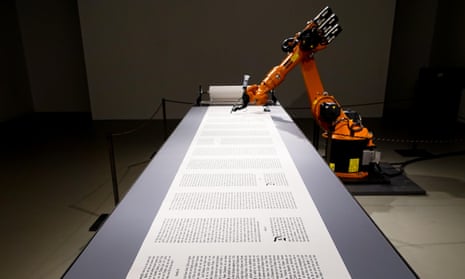

Perhaps a more realistic hope for a text-only program such as GPT2, meanwhile, is simply as a kind of automated amanuensis that can come up with a messy first draft of a tedious business report – or, why not, of an airport thriller about famous symbologists caught up in perilous global conspiracy theories alongside lissome young women half their age. There is, after all, a long history of desperate artists trying rule-based ruses to generate the elusive raw material that they can then edit and polish. The “musical dice game” attributed to Mozart enabled fragments to be combined to generate innumerable different waltzes, while the total serialism of mid-20th‑century music was an algorithmic approach that attempted as far as possible to offload aesthetic judgments by the composer on to a system of mathematical manipulations. More recently, the Koan software developed in the 1990s and used by Brian Eno for his album Generative Music 1 can, if you wish, create an infinite variety of ambient muzaks.

Such a tepid outcome, though, would be disappointing to the serious dystopian sci-fi thinker. Ever since Karel Čapek’s play Rossum’s Universal Robots premiered in 1921, people have wondered whether we will eventually create synthetic life that wipes us out. In particular, the prospect of AI-driven machines taking everyone’s jobs is today a titillating commonplace, even though that way of phrasing it deliberately obscures agency. What we are really talking about is not robots “taking” jobs but employers deliberately firing human beings and replacing them with cheaper machines. Why, for example, is there a rush in the US to create bots that can grade high-school and university essays? Presumably so that fewer teachers need to be hired. No doubt Amazon would lick its lips at the prospect of being able to sell completely computer-generated books (you don’t need to pay royalties to an algorithm), and AI-created conspiracy videos about vaccines would be excellent for YouTube’s bottom line.

How worried we ought to be about such possibilities depends on what we think culture fundamentally is. The excessive hype surrounding the GPT2 text generator, at least, is a symptom that we have to some degree internalised the philistine functionalism of Silicon Valley, according to which everything is simply data. It is true that when you own a huge data-crunching system, everything looks like data. And the mantra that everything is data makes the big tech companies look pretty good, because what they are good at is data. Text can be mathematically encoded and manipulated by a computer, so that’s data too, right?

But writing is not data. It is a means of expression, which implies that you have something to express. A non-sentient computer program has nothing to express, quite apart from the fact that it has no experience of the world to tell it that fires don’t happen underwater. Training it on a vast range of formulaic trash can, to be sure, enable it to reshuffle components and create some more formulaic trash. (Topics “highly represented in the data” of GPT2’s training database were Brexit, Miley Cyrus, and Lord of the Rings.) All well and good. But until robots have rich inner lives and understand the world around them, they won’t be able to tell their own stories. And if one day they could, would we even be able to follow them? As Wittgenstein observed: “If a lion could speak, we would not understand him”. Being a lion in the world is (presumably) so different from being a human in the world that there might be no points of mutual comprehension at all. It’s entirely possible, too, that if a conscious machine could speak, we wouldn’t understand it either.

In the meantime OpenAI has released a limited, less world-threatening version of GPT2 that technically minded users can install on their own computers. I did so, and tremblingly fed the robot the first two sentences of this very article. It continued, in notably defensive mood: “A lot of great fiction has dealt directly with an entirely new kind of robot: those whose lives are not as simple as they might like to think they are, who do not seem to know how to perform tasks that are not so simple but require a little effort and dedication. There can be no substitute for the hard work and perseverance of a robot’s mind, but there is a great deal of room in the literature for stories that might be called novel. [...] In short, every new generation of robots are going to be driven by ambition to make the world a better place, and by those ambitions to make sure they can be made by people as smart as themselves and by machines as well. You might think that these robot minds are the end of humanity when you look closely at their story, but it gets much simpler still. We may still be stuck in a dystopic future where our lives are far from the kind of ones that they were used to. Perhaps it is time we stopped being so self-interested and started taking all our lives in earnest.”

Well, quite. But the enthusiastic frisson with which the press release about GPT2 was greeted tells us something wider about modern times. The prospect of machines taking over may now have become as guiltily desirable as it was always terrifying, in a time when human leaders are doing such a spectacularly bad job. And if citizens increasingly turn to authoritarian leaders – well, what could be more authoritarian than Robocop, or a Terminator? Say what you like about The Terminator’s AI network Skynet, but at least it will make the trains run on time.